To showcase the university's high-level research achievements and encourage research enthusiasm among faculty and students, the university has launched a special column, “Research Express”, on its official account, with one issue published each month. The column follows the latest research achievements of each unit and reports on them systematically, to promote academic exchange and mutual learning, industry-university-research cooperation and the commercialization of research findings, and to help the university's research reach new heights while upholding fundamental principles and breaking new ground.

Recently, SMBU research teams have made significant progress in a number of areas.

Now let’s have a look.

Achievement 1: 1 MS/s, 16-bit high-speed, high-precision, low-power SAR ADC chip

Field: Semiconductor and integrated circuit industrial cluster—mixed-signal integrated circuit design, ADC (analog-to-digital converter) chip

Project introduction:

Pain points: A SAR ADC needs only one comparator and achieves multi-bit quantization by reusing it over successive time steps. Its internal CDAC has no static DC current path, and the ADC as a whole requires no high-precision analog circuit blocks. Compared with other ADC types, it features a simple structure, low power consumption and good process scalability, so it benefits fully from advances in circuit technology. It is therefore widely used in both academia and industry.

The charge-redistribution capacitive digital-to-analog converter (CDAC) is a core component of the SAR ADC and determines many of its performance metrics, including thermal noise, conversion speed and nonlinearity. The larger the CDAC unit capacitor, the lower the thermal noise introduced when the SAR ADC samples the input signal. Likewise, the better the matching between unit capacitors, the smaller the deviation of each bit weight of the SAR ADC from its ideal value, and the better the linearity. However, although a larger unit capacitor area brings these advantages, the larger capacitance also increases the SAR ADC area and the dynamic power consumed in charging and discharging. It also lengthens the settling time of the CDAC output voltage during switching, which reduces the SAR ADC conversion speed.

Based on the above analysis, the size of the CDAC unit capacitor in a SAR ADC must be a compromise between linearity and conversion speed. The design pain point for this type of ADC is therefore how to increase the conversion speed without degrading linearity.
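The trade-off described above can be illustrated numerically. The following minimal Python sketch uses two textbook relations, the sampled thermal noise voltage sqrt(kT/C) and a first-order RC settling time; the capacitance values, the 1 kΩ switch resistance, the 1 V reference and the 300 K temperature are illustrative assumptions and are not taken from the chip described here.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann constant in J/K

def ktc_noise_vrms(c_farads, temp_kelvin=300.0):
    """RMS thermal noise sampled onto a capacitor: sqrt(kT/C)."""
    return math.sqrt(BOLTZMANN * temp_kelvin / c_farads)

def switching_energy(c_farads, v_ref):
    """Order-of-magnitude dynamic energy per full-swing switching event: C * V^2."""
    return c_farads * v_ref ** 2

def settling_time(c_farads, r_switch_ohms, n_bits):
    """Time for a single-pole RC node to settle within half an LSB at n_bits."""
    return r_switch_ohms * c_farads * math.log(2.0 ** (n_bits + 1))

if __name__ == "__main__":
    # Assumed total CDAC capacitance values, for illustration only.
    for c in (1e-12, 4e-12, 16e-12):
        print(f"C = {c * 1e12:4.0f} pF  "
              f"noise = {ktc_noise_vrms(c) * 1e6:6.1f} uV  "
              f"energy = {switching_energy(c, 1.0) * 1e12:5.1f} pJ  "
              f"settling = {settling_time(c, 1e3, 16) * 1e9:6.1f} ns")
```

In this simple model, quadrupling the capacitance halves the noise voltage but multiplies both the switching energy and the settling time by four.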

Solutions: To address these design pain points, the project team adopted a digital solution based on LMS (least mean squares) background calibration, which calibrates the quantization weight coefficients of the SAR ADC in real time. This not only allows the minimum unit capacitor area to be used in the SAR ADC design, but also compensates in real time for drift factors that may affect performance, such as changes in chip ambient temperature and packaging stress. In addition, the calibration requires neither a high-quality external input signal nor a high-performance reference ADC. The solution therefore combines high performance with low cost.

The design works as follows. A pseudo-noise (PN) code stream is injected at the analog input of the SAR ADC. In the digital domain, the quantization result is converted to a decimal value, the injected PN code stream is subtracted, and the resulting difference is correlated with the injected PN code stream. If the weight coefficients used in the summation that converts the quantization result to a decimal value equal the actual bit weights of the SAR ADC, the injected analog perturbation is cancelled and the correlation result tends to zero. Otherwise, the correlation result is nonzero and directly proportional to the weight mismatch. This correlation result is used as the input of the LMS algorithm, which adjusts the bit-weight coefficients of the SAR ADC in real time. With an appropriately chosen step size, the algorithm finds the weight deviations caused by capacitor mismatch in the SAR ADC quantization, thereby calibrating out the ADC nonlinearity.
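The calibration loop can be illustrated with a small behavioural simulation. The sketch below is not the team's implementation and does not reproduce the PN-correlation scheme exactly: to keep the example short and quickly convergent, it swaps in a simplified perturbation-based variant in which every input sample is converted twice, once with a known offset +delta and once with -delta, so that the unknown input cancels in the difference. The LMS update then drives the digital weights until the reconstructed difference equals 2*delta. The sub-radix-2 weights (radix 1.8), the 14 bit decisions, the 2% mismatch, the offset and the step size are all assumed values chosen for illustration.

```python
import numpy as np

def sar_convert(v, weights):
    """Behavioural bipolar SAR conversion; returns bit decisions in {-1, +1}."""
    bits = []
    residue = v
    for w in weights:
        b = 1.0 if residue >= 0 else -1.0
        bits.append(b)
        residue -= b * w
    return np.array(bits)

def lms_calibrate(w_true, w_init, delta, n_samples=20000, mu=0.01, seed=0):
    """Estimate the analog bit weights from pairs of perturbed conversions."""
    rng = np.random.default_rng(seed)
    w_est = w_init.copy()
    v_max = 0.9 * w_true.sum() - delta      # keep both conversions in range
    for _ in range(n_samples):
        vin = rng.uniform(-v_max, v_max)    # unknown input, never used digitally
        b_plus = sar_convert(vin + delta, w_true)
        b_minus = sar_convert(vin - delta, w_true)
        x = b_plus - b_minus                # the input cancels in the difference
        err = x @ w_est - 2.0 * delta       # zero when the weights are correct
        w_est -= mu * err * x               # LMS update with step size mu
    return w_est

def demo():
    """Return the weight-error norm before and after calibration."""
    rng = np.random.default_rng(1)
    n_bits, radix = 14, 1.8                 # assumed redundant (sub-radix-2) array
    w_nominal = 0.5 * radix ** (-np.arange(n_bits, dtype=float))
    w_true = w_nominal * (1.0 + 0.02 * rng.standard_normal(n_bits))
    w_cal = lms_calibrate(w_true, w_nominal, delta=0.05)
    return (np.linalg.norm(w_nominal - w_true),
            np.linalg.norm(w_cal - w_true))

if __name__ == "__main__":
    before, after = demo()
    print(f"weight error before: {before:.2e}, after: {after:.2e}")
```

`demo()` returns the Euclidean norm of the weight error before and after calibration. The weight redundancy is what makes the individual bit weights observable from the code differences in this model; a strictly binary-weighted array would not behave the same way.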

Competitive advantage analysis: By operating mode, SAR ADC calibration can be divided into foreground calibration and background calibration. Foreground calibration must be carried out before normal operation: the ADC is tested with special signals and instruments and, depending on the results, either laser trimming is performed or calibration coefficients are stored in on-chip memory. This increases the packaging and testing cost of the chip. Background calibration needs no separate calibration phase and does not interrupt normal ADC operation, so it offers low cost and real-time compensation, at the price of a more complex implementation.

By calibration means, SAR ADC nonlinearity calibration can be divided into analog calibration and digital calibration. Analog calibration often requires an additional calibration circuit that operates during a dedicated calibration period, which interrupts normal ADC operation and reduces the conversion speed. Digital calibration has no such requirement and can run entirely in the background.

Market application prospect: With rising health awareness, medical detection devices will gradually find their way into every household. Medical electronics is likely to become another major growth area for the semiconductor industry after wireless communication, cloud computing and IoT. Miniaturization and intelligence will be a development trend for medical instruments and devices. Medical imaging systems such as digital X-ray machines and magnetic resonance imaging currently place very high demands on ADC performance, often including high precision (greater than 16 bits), a very wide spurious-free dynamic range (SFDR) and high linearity. To reduce the number of interleaved channels, a single-channel ADC needs a sampling rate from hundreds of kilohertz to several megahertz. System power consumption should also be as low as possible. The SAR (successive approximation register) ADC architecture is often adopted to meet these requirements. Well-known ADC design companies abroad, including ADI and TI, have launched series of high-precision SAR ADC products, and academic research abroad has also produced results in high-performance SAR ADCs [8], [15]. Domestic academia and industry started relatively late in this research and have less accumulated experience and technology. The high-precision, low-power SAR ADC designed by the project team therefore has broad application prospects in medical electronics, channel-isolated PLC analog input modules, test and measurement, and battery monitoring.

Development planning: The achievement is planned to be commercialized through IP licensing or buyout.

Intellectual property: full intellectual property

Cooperation needs: The team first seeks system integration companies and chip design companies that need high-precision ADC chips and IP, and then plans to cooperate with them through technology buyout and IP licensing.

Team introduction: The research team is led by Ge Binjie of the engineering department and focuses on the research and development of high-performance ADC chips, including the design and application of high-speed, high-precision, low-power SAR ADCs and high-precision Sigma-Delta ADCs for signal-chain applications.

Achievement 2: Image segmentation method, system, terminal and storage medium based on parallel network framework and dynamic fusion

Field: High-end medical device industrial cluster--AI-enabled smart healthcare

Project introduction:

Pain points:

Missing multi-modal data: In clinical practice, MRI sequences (T1, T1ce, T2, FLAIR) are often missing because of differences in scan protocols, equipment failure, patient compliance or other reasons, which sharply degrades the performance of traditional deep learning models when the input is incomplete.

Trade-off between local and global features: Convolutional neural networks (CNNs) extract local texture efficiently but struggle to capture long-range dependencies. Transformers can model global context, but they are computationally expensive on 3D medical images and hard to apply to high-resolution volume data.

Insufficient uncertainty quantification: Many methods use the KL divergence to measure the difference between distributions, which lacks robustness to noise and missing data and easily leads to overfitting or underfitting.

Relatively weak generalization: Existing models are usually trained on fixed modality sets, cannot adapt flexibly to arbitrary missing modalities, and lack a dynamic adjustment strategy.

Solutions:

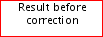

Parallel Mamba state space framework: The efficient Mamba structured state space model (SSM) is integrated into the parallel branches of a U-Net. Gated spatial convolution (GSC) extracts multi-scale local features, while the state space module models global dependencies.

AIC (Akaike information criterion) driven knowledge distillation: A Hölder divergence based on the Dirichlet distribution is introduced to accurately quantify the difference between the predicted distribution and the ground-truth distribution. A mutual information loss maximizes the feature association between the complete and missing modalities, improving the completeness of the latent representation.

Dynamic sharing (DS) mechanism: Network parameters and fusion weights are adjusted dynamically according to the modalities actually available. No separate model needs to be trained for each missing-modality case, so a single model adapts flexibly to any combination of missing modalities.

Theoretical support: Three theorems on the stability, robustness and fusion capability of the Mamba module under missing-modality scenarios are proposed and proved, providing mathematical support for the convergence and reliability of the method.

Fig.1 Elastic missing-modality MRI segmentation framework based on Mamba state space modeling and AIC. The method achieves robust segmentation with missing MRI modalities by integrating the Hölder divergence driven by the Dirichlet distribution with a mutual-information-enhanced knowledge transfer mechanism.

Fig.2 Visual results of medical image segmentation with the algorithm
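The idea of adapting the fusion weights to whichever modalities are present can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not the team's DS mechanism: the function name `fuse_available`, the per-modality logits `scores` (standing in for learned parameters) and the masked-softmax fusion rule are all hypothetical.

```python
import numpy as np

MODALITIES = ("T1", "T1ce", "T2", "FLAIR")

def fuse_available(features, available, scores):
    """Fuse per-modality feature vectors using only the modalities present.

    features:  array of shape (n_modalities, n_channels)
    available: boolean mask of shape (n_modalities,)
    scores:    per-modality logits of shape (n_modalities,), assumed learnable
    """
    features = np.asarray(features, dtype=float)
    available = np.asarray(available, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    if not available.any():
        raise ValueError("at least one modality must be available")
    # Missing modalities get a logit of -inf, i.e. a softmax weight of zero.
    logits = np.where(available, scores, -np.inf)
    weights = np.exp(logits - logits[available].max())
    weights /= weights.sum()
    # Zero out missing rows so that placeholder values cannot leak through.
    masked = np.where(available[:, None], features, 0.0)
    return weights @ masked, weights

if __name__ == "__main__":
    feats = np.random.default_rng(0).standard_normal((len(MODALITIES), 8))
    fused, w = fuse_available(feats, [True, False, True, True], np.zeros(4))
    print(dict(zip(MODALITIES, np.round(w, 3))))
```

The same parameters serve every missing-modality pattern because the mask, not a separate model, decides which inputs contribute.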

Competitive advantage analysis:

Computing efficiency: Compared with the computationally expensive Transformer, the fully parallel Mamba SSM requires only linear convolution computations, significantly reducing computation and memory costs.

Strong robustness: Knowledge distillation and the mutual information constraint based on the Hölder divergence make the model more tolerant of noise and missing data. The Dice similarity coefficient (DSC) improves by about 5% on average on the BraTS and CHAOS datasets.

High applicability: A single training run covers any modality set, with no repeated fine-tuning for different missing-modality cases, which greatly reduces deployment and maintenance costs.

Reliable theory: The convergence and stability of the core modules are proved as theorems, meeting the strict requirements of medical imaging for interpretability and reliability.

Open-source verification: The code has been released on GitHub to facilitate secondary development.
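For readers unfamiliar with the DSC metric quoted above, the following short sketch shows how the Dice similarity coefficient is commonly computed for binary segmentation masks. The function name and the convention of returning 1.0 when both masks are empty are choices made for this example, not details taken from the team's evaluation code.

```python
import numpy as np

def dice_coefficient(pred, target):
    """Dice similarity coefficient 2|A∩B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denom = pred.sum() + target.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * np.logical_and(pred, target).sum() / denom

if __name__ == "__main__":
    prediction = np.array([[1, 1, 0], [0, 1, 0]])
    reference = np.array([[1, 0, 0], [0, 1, 1]])
    print(f"DSC = {dice_coefficient(prediction, reference):.3f}")
```

A DSC of 1 means the predicted and reference masks coincide exactly, and 0 means they do not overlap at all.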

Market application prospects:

Accurate tumor diagnosis: The method can be integrated seamlessly into neurosurgery and radiotherapy planning systems to help doctors segment lesions with high precision when sequences are missing.

Segmentation of abdominal organs: The method performs well on CHAOS and other multi-modality segmentation tasks covering the liver, kidneys and spleen, and is expected to extend to automatic annotation and preoperative evaluation of various organs.

Multi-modality fusion platform: The method can be extended to fuse CT, PET and other imaging data, providing underlying components for smart healthcare, remote diagnosis and AI image analysis cloud services.

Industrialization opportunity: With the rapid growth of domestic and international medical imaging AI markets, the technology's efficient algorithm, flexible deployment and quantifiable performance give it advantages for commercialization.

Development planning: The team plans to promote commercialization through joint ventures or technology licensing, offering the technology as an underlying component for smart healthcare, remote diagnosis and AI image analysis cloud services, and targeting application scenarios in strong demand so as to serve industrial development and public wellbeing.

Intellectual property: Cheng Runze, Li Chun et al, application No./patent No.: 202510429723.1, Image segmentation method, system, terminal and storage medium based on parallel network framework and dynamic fusion

Cooperation needs: We sincerely invite medical institutions, imaging centers, AI algorithm teams, software integrators, investment institutions and other partners to jointly promote the industrialization and clinical application of the elastic missing-modality MRI segmentation technology. Specifically, clinical institutions would provide multi-center, multi-sequence MRI data and user feedback; AI teams and software integrators would jointly work on model lightweighting, inference acceleration and integration with HIS/PACS; investment institutions would support product registration and certification and market promotion; industry alliances would participate in developing technical standards; and cloud service providers would provide elastic computing and data security support. Together, the partners would build an efficient and reliable medical imaging AI solution that benefits patients and medical practitioners.

Team introduction: The team led by Li Chun belongs to the SMBU Applied Mathematics Joint Research Center and focuses on theoretical and applied research in medical image analysis, computer vision and deep generative models (including GANs, VAEs and diffusion models). In recent years, the team has participated in multiple research projects, including projects of the National Natural Science Foundation of China, the Guangdong Basic and Applied Basic Research program and the Shenzhen stable support program for universities and colleges. It has published nearly 20 papers in leading international journals and conferences, including IEEE TIP, TMM, JBHI and ICME, and has applied for and been granted multiple national invention patents in 3D sparse-view reconstruction, missing-modality segmentation and multi-task inverse imaging. With strong algorithm development capability, the team works on turning cutting-edge AI technology into highly reliable medical imaging products that serve smart healthcare and remote diagnosis.

Achievement 3: Imaging reconstruction method, system, terminal and storage medium based on hybrid network framework

Field: High-end medical device industrial cluster--AI and computer vision

Project introduction:

Pain points:

Inherent complexity of inverse problems: In computational physics and imaging science, inverse problems (such as inferring a source position from scattered field data, or recovering an original image from a corrupted one) are inherently ill-posed. The solution may be neither unique nor stable, and it is very sensitive to noise and sparsity in the input data. The performance and efficiency of traditional numerical methods drop sharply in complex situations involving nonlinearity, multiple sources or poor data quality.

Limitations of existing deep learning methods: Although deep learning has succeeded in many fields, applying it directly to complex physical inverse problems remains challenging. For example, standard deep learning models have difficulty incorporating physical laws as constraints, so their predictions may be physically inconsistent. Operator learning networks such as DeepONet are powerful but can still suffer from slow convergence, a heavy computational burden and overfitting on sparse or noisy data.

Difficulty of fusing physical constraints with data-driven learning: A core difficulty is how to combine physical prior knowledge (such as the Navier-Stokes equations) effectively with the data-driven learning paradigm. Relying on data alone may require large amounts of high-quality data, while relying on the physical model alone makes it hard to handle complex real-world scenarios. The two approaches are currently not deeply integrated, resulting in insufficient model generalization and robustness.

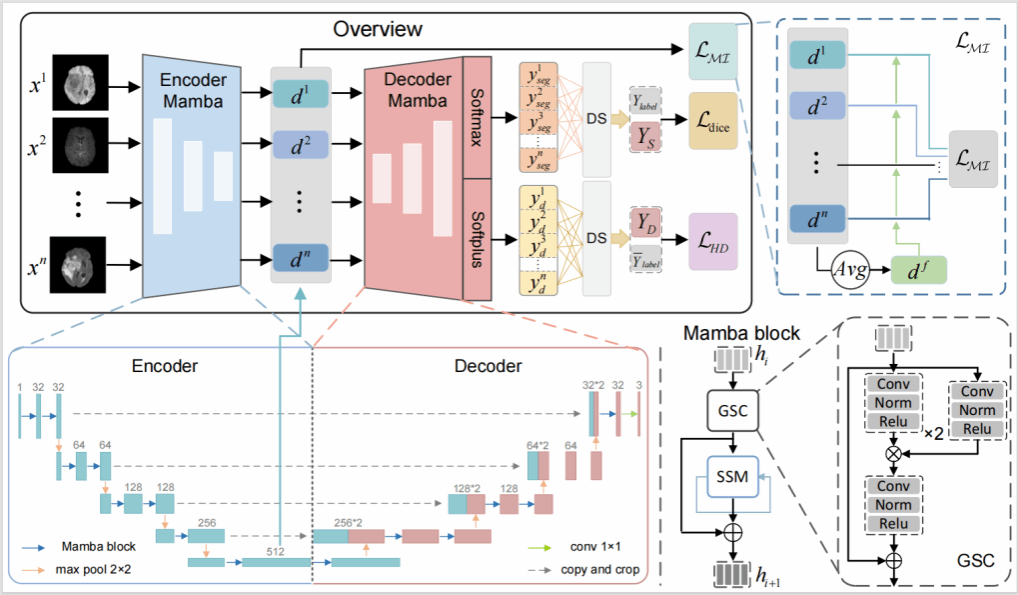

Solutions: This research proposes an innovative hybrid framework that combines the deep operator network (DeepONet) with the neural tangent kernel (NTK) to solve complex inverse problems efficiently and robustly.

Core architecture, DeepONet operator learning: The framework centers on DeepONet, which learns a nonlinear operator from the input function space to the output function space. Its distinctive “branch-trunk” network structure effectively decouples different aspects of the input and captures complicated relations between functions.
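The two-network structure can be made concrete with a minimal forward pass. In the DeepONet literature the two sub-networks are usually called the branch net, which encodes the input function sampled at fixed sensor points, and the trunk net, which encodes the query coordinate; the output is their inner product. The sketch below uses untrained random weights, and the layer sizes, the `tanh` activation and all function names are assumptions made for illustration rather than details of the team's model.

```python
import numpy as np

def init_mlp(sizes, rng):
    """Random weights for a fully connected network with the given layer sizes."""
    return [(rng.standard_normal((m, n)) / np.sqrt(m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """Forward pass: tanh on hidden layers, linear output layer."""
    for w, b in params[:-1]:
        x = np.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

def deeponet_forward(branch, trunk, u_sensors, y_query):
    """G(u)(y) ~ sum_k branch_k(u) * trunk_k(y).

    u_sensors: (batch, n_sensors) samples of each input function
    y_query:   (n_query, dim_y) coordinates at which to evaluate the output
    returns:   (batch, n_query)
    """
    b = mlp(branch, u_sensors)  # (batch, p) coefficients from the input function
    t = mlp(trunk, y_query)     # (n_query, p) basis values at the query points
    return b @ t.T

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    branch = init_mlp([20, 32, 16], rng)  # 20 sensors, 16 shared latent features
    trunk = init_mlp([1, 32, 16], rng)    # 1-D query coordinate
    u = rng.standard_normal((3, 20))
    y = np.linspace(0.0, 1.0, 5)[:, None]
    print(deeponet_forward(branch, trunk, u, y).shape)
```

Because the branch and trunk outputs are combined only at the final inner product, the same encoded input function can be evaluated at any number of query points without re-running the branch net.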

Training dynamics optimization, NTK integration: The neural tangent kernel (NTK) is integrated into the training process. The NTK allows the training dynamics of a neural network to be analyzed and predicted theoretically. By monitoring and exploiting the properties of the NTK, the framework can:

a) stabilize the training process: linearized training dynamics help avoid vanishing or exploding gradients and ensure stable convergence of the model.

b) accelerate convergence: the spectral characteristics of the NTK are used to adapt the learning rate and find the optimal convergence path.

c) improve generalization: the model complexity is controlled to avoid overfitting on sparse or noisy data.
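As a rough illustration of how an NTK spectrum can inform the learning rate, the sketch below computes the empirical NTK of a tiny one-hidden-layer network and derives a step size from its largest eigenvalue. It relies on the standard linearized-training argument: for the loss 0.5 * sum((f - y)^2), the residual evolves approximately as r <- (I - eta * K) r, which is stable when eta < 2 / lambda_max(K). The network, its size and the `safety` factor are assumptions for this example; the team's adaptive scheme is not described in enough detail here to reproduce.

```python
import numpy as np

def jacobian(params, x):
    """Rows are d f(x_n) / d theta for f(x) = a . tanh(W x) / sqrt(m)."""
    W, a = params
    m = a.size
    h = np.tanh(x @ W.T)                                   # (N, m)
    d_a = h / np.sqrt(m)                                   # (N, m)
    d_W = ((1.0 - h ** 2) * a)[:, :, None] * x[:, None, :] / np.sqrt(m)
    return np.concatenate([d_a, d_W.reshape(len(x), -1)], axis=1)

def empirical_ntk(params, x):
    """Empirical NTK Gram matrix K = J J^T on the inputs x."""
    J = jacobian(params, x)
    return J @ J.T

def stable_learning_rate(kernel, safety=0.5):
    """Step size safety * 2 / lambda_max, inside the linearized stability bound."""
    lam_max = np.linalg.eigvalsh(kernel)[-1]
    return safety * 2.0 / lam_max

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = (rng.standard_normal((64, 2)), rng.standard_normal(64))
    x = rng.standard_normal((10, 2))
    K = empirical_ntk(params, x)
    print("largest NTK eigenvalue:", np.linalg.eigvalsh(K)[-1])
    print("suggested step size:", stable_learning_rate(K))
```

The small eigenvalues of K indicate directions that are learned slowly, which is one reason the spectrum is a useful diagnostic for convergence speed.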

Hybrid loss function design: A composite loss function is designed to integrate data-driven learning closely with physical constraints:

• Data-driven loss: ensures that the model output matches the observed data.

• Physics-informed loss: takes physical laws such as the Navier-Stokes equations as soft constraints and penalizes solutions that violate them.

• Perceptual loss: in image reconstruction tasks, uses a pre-trained network (such as VGG) to extract high-level features, so that the reconstructed image looks more natural and sharper.

Fig.1: Detailed structure of the hybrid DeepONet-NTK framework, showing the whole process from input through feature extraction and NTK-assisted optimization to the final loss function computation.
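The three-term composite loss described above can be sketched as a weighted sum. This is a minimal illustration under stated assumptions: the weights `w_data`, `w_phys` and `w_perc` are hypothetical, the PDE residual is passed in by the caller rather than computed from the Navier-Stokes equations, and `feature_fn` is a stand-in for a pre-trained feature extractor such as VGG.

```python
import numpy as np

def hybrid_loss(pred, target, pde_residual, feature_fn,
                w_data=1.0, w_phys=0.1, w_perc=0.01):
    """Weighted sum of data, physics and perceptual terms (all mean squared)."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    data_term = np.mean((pred - target) ** 2)
    # Residual of the governing equations evaluated on the prediction;
    # it is zero when the prediction satisfies the physics exactly.
    phys_term = np.mean(np.asarray(pde_residual, dtype=float) ** 2)
    # Distance in feature space rather than pixel space.
    perc_term = np.mean((feature_fn(pred) - feature_fn(target)) ** 2)
    total = w_data * data_term + w_phys * phys_term + w_perc * perc_term
    return total, {"data": data_term, "physics": phys_term, "perceptual": perc_term}

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.random((8, 8))
    pred = target + 0.05 * rng.standard_normal((8, 8))
    residual = 0.1 * rng.standard_normal((8, 8))
    # Toy "feature extractor": horizontal differences, standing in for VGG features.
    total, parts = hybrid_loss(pred, target, residual, lambda img: np.diff(img, axis=1))
    print(total, parts)
```

Returning the individual terms alongside the total makes it easier to see which constraint dominates during training and to tune the weights accordingly.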

Competitive advantage analysis:

Excellent performance and higher precision: Experimental results show that the framework outperforms existing baseline models (such as VAE, VQVAE and DDPM), both in the source localization task governed by the Navier-Stokes equations and in standard image reconstruction tasks (such as CIFAR-10/100 and MNIST). Both quantitative metrics (PSNR, SSIM) and qualitative results demonstrate its precision and detail recovery capability.

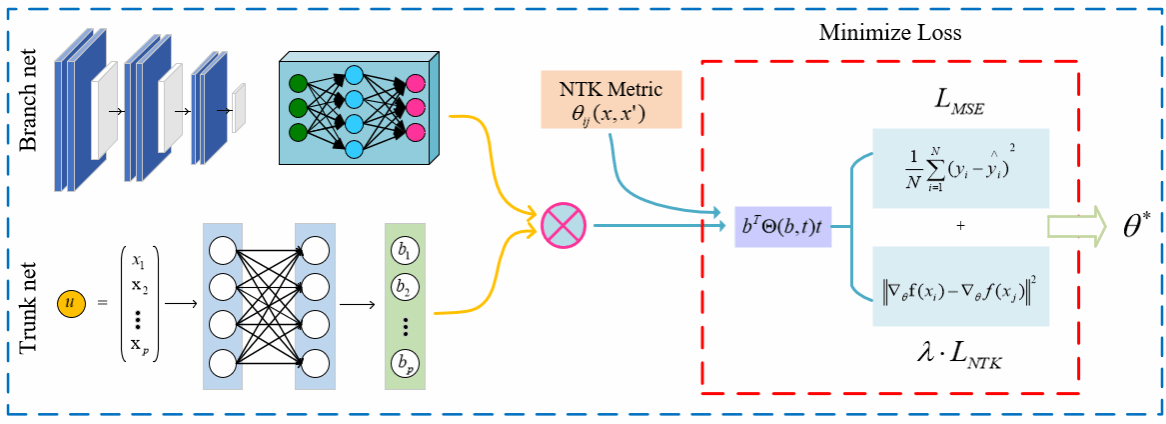

Fig.2 Image reconstruction comparison (Fig.4 of the paper)

Fig.2 shows the image reconstruction results on several datasets. The images generated by this method are significantly better than those of other methods in sharpness, detail preservation and artifact suppression.
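Of the two quantitative metrics named above, PSNR is simple enough to show in full. The sketch below uses the usual definition, 10 * log10(MAX^2 / MSE); the function name and the default peak value of 1.0 (images scaled to [0, 1]) are choices made for this example.

```python
import numpy as np

def psnr(reference, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=float)
    reconstruction = np.asarray(reconstruction, dtype=float)
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = rng.random((32, 32))
    noisy = np.clip(clean + 0.05 * rng.standard_normal((32, 32)), 0.0, 1.0)
    print(f"PSNR = {psnr(clean, noisy):.2f} dB")
```

Higher values indicate a reconstruction closer to the reference; SSIM complements PSNR by comparing local structure rather than per-pixel error.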

Strong robustness and wide applicability: By combining the NTK with physical constraints, the model becomes much more robust to noise and data sparsity. It not only handles problems under ideal conditions but also provides reliable solutions in realistic scenarios where data is limited or of poor quality. The framework is highly general and has been validated in two major fields, fluid dynamics and computer vision, showing its potential as a general inverse problem solver.

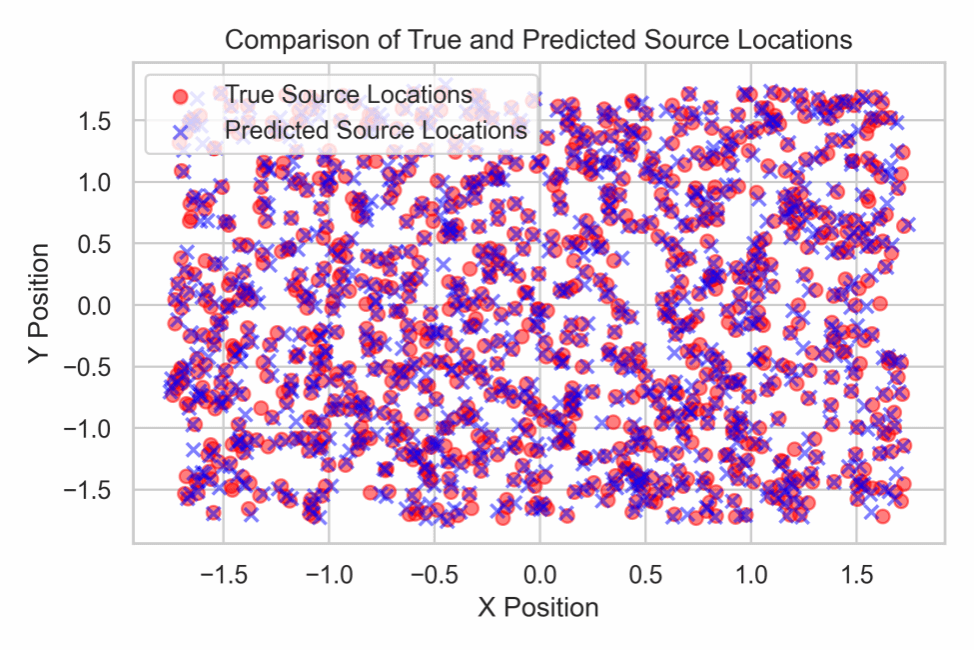

Fig.3 Navier-Stokes inverse problem solving result

The figure shows close agreement between the source position predicted by the model (red) and the true position, demonstrating high precision in complex physical scenarios.

Efficient and stable training: Introducing the NTK addresses the difficult training and slow convergence of traditional operator networks. It provides theoretical support and optimization guidance for the training process, so that the model can reach its optimal state while depending less on computational resources and parameter-tuning skill, which makes engineering deployment easier.

Strong physical interpretability: Unlike black-box models, the framework builds physical laws into the model through the physics-informed loss function. Its output is not only consistent with the data but also more consistent and reliable physically. This is critical for healthcare, scientific research, engineering and other fields with high requirements for safety and reliability.

Market application prospects:

Medical image analysis:

• Fast MRI/CT reconstruction: reconstruct high-quality medical images quickly from undersampled k-space data, shortening scan time, reducing motion artifacts and improving the patient experience.

• Image denoising and enhancement: improve the quality of low-dose scan images, reduce radiation risks, and maintain the accuracy of diagnostic information.

• Functional imaging source localization: accurately locate the sources of brain neural activity in magnetoencephalography (MEG) or electroencephalography (EEG).

Industrial and engineering fields:

• Non-destructive testing (NDT): accurately identify internal cracks and defects in materials from ultrasonic or electromagnetic scattering data, for safety inspection in aerospace and energy pipelines.

• Computational fluid dynamics (CFD): in aircraft design, weather forecasting and other fields, infer the force sources or boundary conditions of a flow field from limited observation data to improve design and prediction.

Geophysical and environmental monitoring:

• Earthquake source localization: rapidly and accurately invert for the position, depth and magnitude of an earthquake source from seismic wave data recorded by ground stations.

• Pollution source tracking: trace back the position and emission rate of pollution sources from sparse samples of pollutant concentrations in water or air.

Consumer-grade applications:

• Image/video inpainting: develop more powerful software functions, such as old photo restoration, image demosaicing and video super-resolution, to improve the quality of users' digital media content.

Development planning: The team plans to promote commercialization through technology licensing, joint ventures or independent operation, building the framework into a new-generation core algorithm engine. We will first provide accurate, robust and physically consistent solutions for high-value application scenarios such as fast medical image reconstruction, industrial NDT, CFD and geophysical prospecting. In the long term, we are committed to building a universal and efficient cloud platform for solving inverse problems, empowering scientific research and industrial innovation, and solving long-standing "reverse engineering" and data recovery problems in various industries.

Intellectual property: Fang Yuhao, Li Chun et al, application No./patent No.: 2025104458178, Imaging reconstruction method, system, terminal and storage medium based on hybrid network framework

Cooperation needs: We sincerely invite equipment manufacturers, industrial NDT enterprises, geophysical service companies, AI algorithm teams, software integrators, forward-looking investment institutions and other partners to jointly promote the industrialization and wider application of the hybrid inverse problem solving framework. We hope that industrial users can provide real and challenging inverse problem scenarios and datasets (such as undersampled medical images and industrial scattered field signals) for model verification and iterative optimization; that AI teams and software integrators can jointly work on model lightweighting, deployment acceleration and seamless integration with existing workflows; and that investment institutions can provide financial and strategic resources for technology development, productization and market expansion. We also hope to work with all parties to build an efficient and reliable cross-disciplinary AI solution for computational physics and imaging, overcoming key technology bottlenecks and serving high-end manufacturing, precision medicine, energy security and other important fields.

Team introduction: The team led by Li Chun belongs to the SMBU Applied Mathematics Joint Research Center and focuses on theoretical and applied research in medical image analysis, computer vision and deep generative models (including GANs, VAEs and diffusion models). In recent years, the team has participated in multiple research projects, including projects of the National Natural Science Foundation of China, the Guangdong Basic and Applied Basic Research program and the Shenzhen stable support program for universities and colleges. It has published nearly 20 papers in leading international journals and conferences, including IEEE TIP, TMM, JBHI and ICME, and has applied for and been granted multiple national invention patents in 3D sparse-view reconstruction, missing-modality segmentation and multi-task inverse imaging. With strong algorithm development capability, the team works on turning cutting-edge AI technology into highly reliable medical imaging products that serve smart healthcare and remote diagnosis.

Achievement 4: Intracellular aggregation of exogenous molecules for biomedical applications

Associate Professor Wang Haoran of the SMBU Faculty of Materials Science recently published a review paper titled “Intracellular aggregation of exogenous molecules for biomedical applications” in the internationally renowned journal Chemical Society Reviews (DOI: 10.1039/d5cs00141b). Wang Haoran is corresponding author and co-first author; Academician Tang Benzhong of the Chinese University of Hong Kong (Shenzhen), Professor Xu Wanhai of Harbin Medical University and Professor Cheng Dongbing of Wuhan University of Technology are co-corresponding authors. The paper reveals the aggregation mechanism of exogenous molecules in cells, providing new solutions for cancer treatment and precise imaging.

Link to the paper:

https://mp.weixin.qq.com/s/Ay2nxA77hEr1gipz_CtWAQ

Achievement 5: Legal logic and challenges and solutions of Chinese e-commerce platform credit management

Recently, the research paper “Legal Logic, Problems and Improvement of Credit Management on Chinese E-commerce Platforms” (in Russian: Правовая логика, проблемы и пути совершенствования кредитного управления на платформах электронной коммерции в Китае), jointly written by Yang Tianfang of the SMBU Sino-Russian Comparative Law Research Center and Guo Yu of the Inner Mongolia University Law School, was officially published as the fifth article in the first issue of 2025 of the leading Russian law journal Правоведение (Open Journal of Legal Science), with Yang Tianfang as co-first author and corresponding author.

Link to the paper:

https://mp.weixin.qq.com/s/DSRfU47zfYCnbqCdy6EjTA

Achievement 6: Chemical bond management of FA-based mixed halide perovskites for stable and high-efficiency solar cells

Recently, Associate Professor Liu Na of the applied nanophotonics team at the SMBU Faculty of Materials Science has made significant research progress on perovskite solar cells, with the results published in Advanced Energy Materials and Energy Material Advances, leading journals in the materials field.

Link to the paper:

https://mp.weixin.qq.com/s/VU3k9s5Mrpxj6Xf03CQqDg

Achievement 7: Global understanding via local extraction for data clustering and visualization

Recently, Professor Zhang Zhenyue of the SMBU Faculty of Computational Mathematics and Cybernetics published the research paper “Global understanding via local extraction for data clustering and visualization” in Patterns, a leading international journal published by Cell Press, as first author and with Shenzhen MSU-BIT University as the primary affiliation. The research addresses key challenges in clustering and visualizing complex unlabeled data. Through category-consistent local extraction, global propagation and self-learning, the GULE framework proposed in the paper achieves high-precision clustering (such as cell type identification from RNA-seq data) and topology-preserving visualization, providing new solutions for biomedicine and other fields and supporting the discovery of data patterns across disciplines.