Xue Zhipeng (first author) and Li Chun (corresponding author) of the Joint Research Center in Applied Mathematics at Shenzhen MSU-BIT University (SMBU) have published a paper titled "Uncertainty Quantification for Incomplete Multi-View Data Using Divergence Measures" in IEEE Transactions on Image Processing (TIP), a flagship publication in the computer graphics field. With an impact factor of 13.7, TIP is ranked Q1 in the Chinese Academy of Sciences classification and holds CCF Class A status from the China Computer Federation. Publication in this highly selective journal requires research that makes significant theoretical and practical contributions to the field. The achievement highlights SMBU's strong research capabilities.

Figure 1: Journal and Indexing Information of the Paper

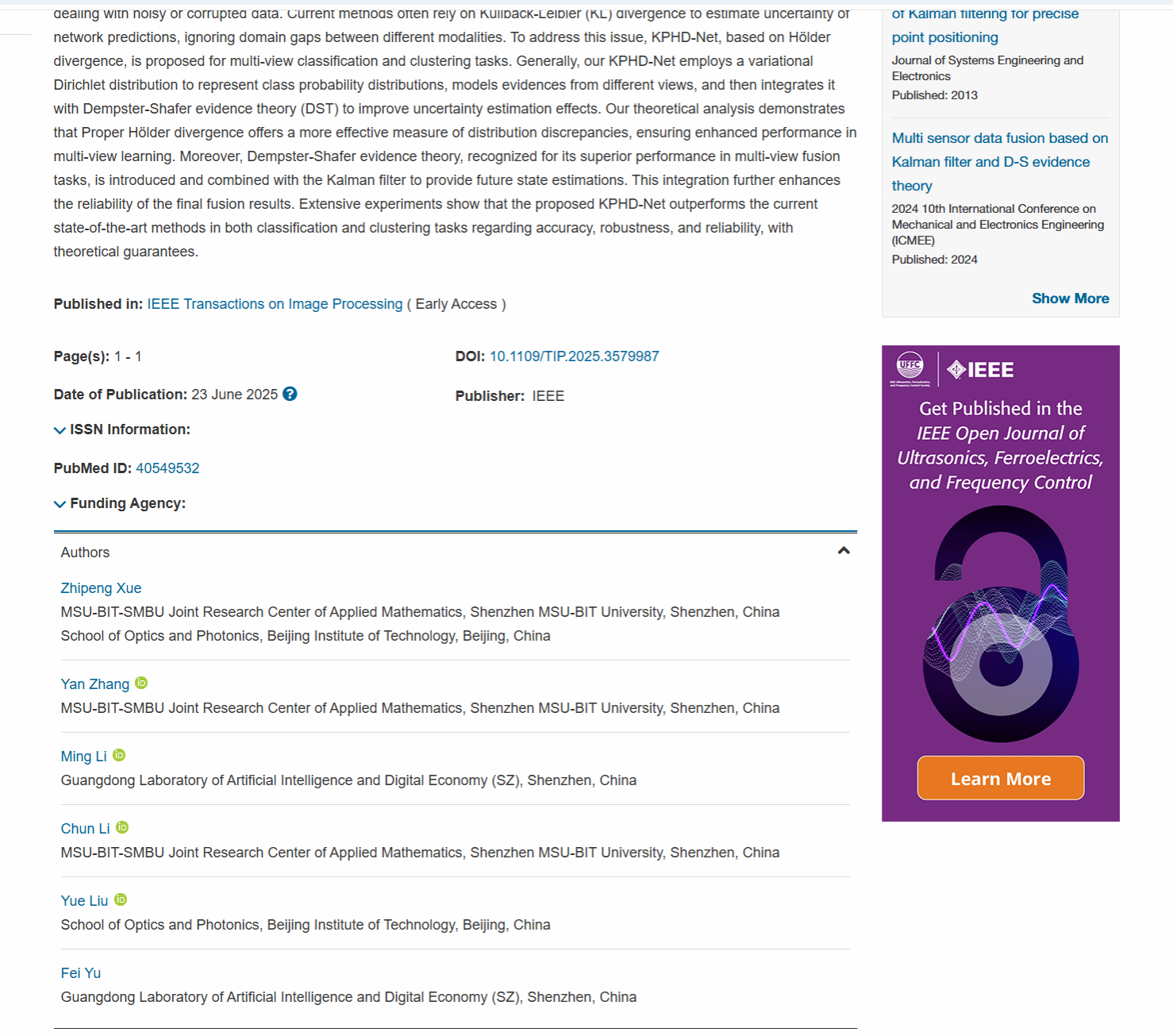

Current multi-view classification and clustering methods often rely on the Kullback–Leibler divergence (KLD) and overlook cross-modal discrepancies when handling noisy or incomplete views. To address this limitation, the study introduces KPHD-Net, a model based on the Proper Hölder Divergence (PHD). KPHD-Net is the first to use variational Dirichlet distributions to represent class probability distributions. By integrating Dempster-Shafer evidence theory with Kalman filtering, the model enables dynamic evidence fusion and uncertainty quantification in multi-view learning. Both theoretical analysis and comprehensive experimental validation indicate that PHD measures discrepancies between distributions better than the traditional KLD, resulting in substantial improvements in the accuracy, robustness, and reliability of classification and clustering.
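To make the contrast between the two divergence measures concrete, the following is a minimal sketch on small discrete distributions. It assumes the standard textbook form of the proper Hölder divergence with conjugate exponents (1/α + 1/β = 1) and a power parameter γ; the function names, the parameter defaults, and the toy inputs are illustrative assumptions. The paper applies PHD to Dirichlet distributions, and that formulation is not reproduced here.

```python
import numpy as np

def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) for strictly positive vectors."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return float(np.sum(p * np.log(p / q)))

def holder_divergence(p, q, alpha=2.0, gamma=2.0):
    """Discrete proper Hölder divergence (illustrative, assumed form).

    alpha > 1 and beta are conjugate exponents; gamma > 0 is a power
    parameter. With alpha = 2 and gamma = 2 this reduces to the
    Cauchy-Schwarz divergence.
    """
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    beta = alpha / (alpha - 1.0)  # conjugate exponent: 1/alpha + 1/beta = 1
    numerator = np.sum(p ** (gamma / alpha) * q ** (gamma / beta))
    denominator = (np.sum(p ** gamma) ** (1.0 / alpha)
                   * np.sum(q ** gamma) ** (1.0 / beta))
    # Hölder's inequality guarantees numerator <= denominator,
    # so the result is non-negative and zero when p is proportional to q.
    return float(-np.log(numerator / denominator))

# Toy class-probability vectors from two hypothetical views
view_a = [0.7, 0.2, 0.1]
view_b = [0.1, 0.2, 0.7]
print("KL :", kl_divergence(view_a, view_b))
print("PHD:", holder_divergence(view_a, view_b))
```

Unlike KLD, this divergence is projective: rescaling either input by a positive constant leaves the value unchanged, and with α = β = 2 it is also symmetric in its arguments.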

Figure 2: Overview of the Uncertainty Quantification Framework for Incomplete Multi-view Data

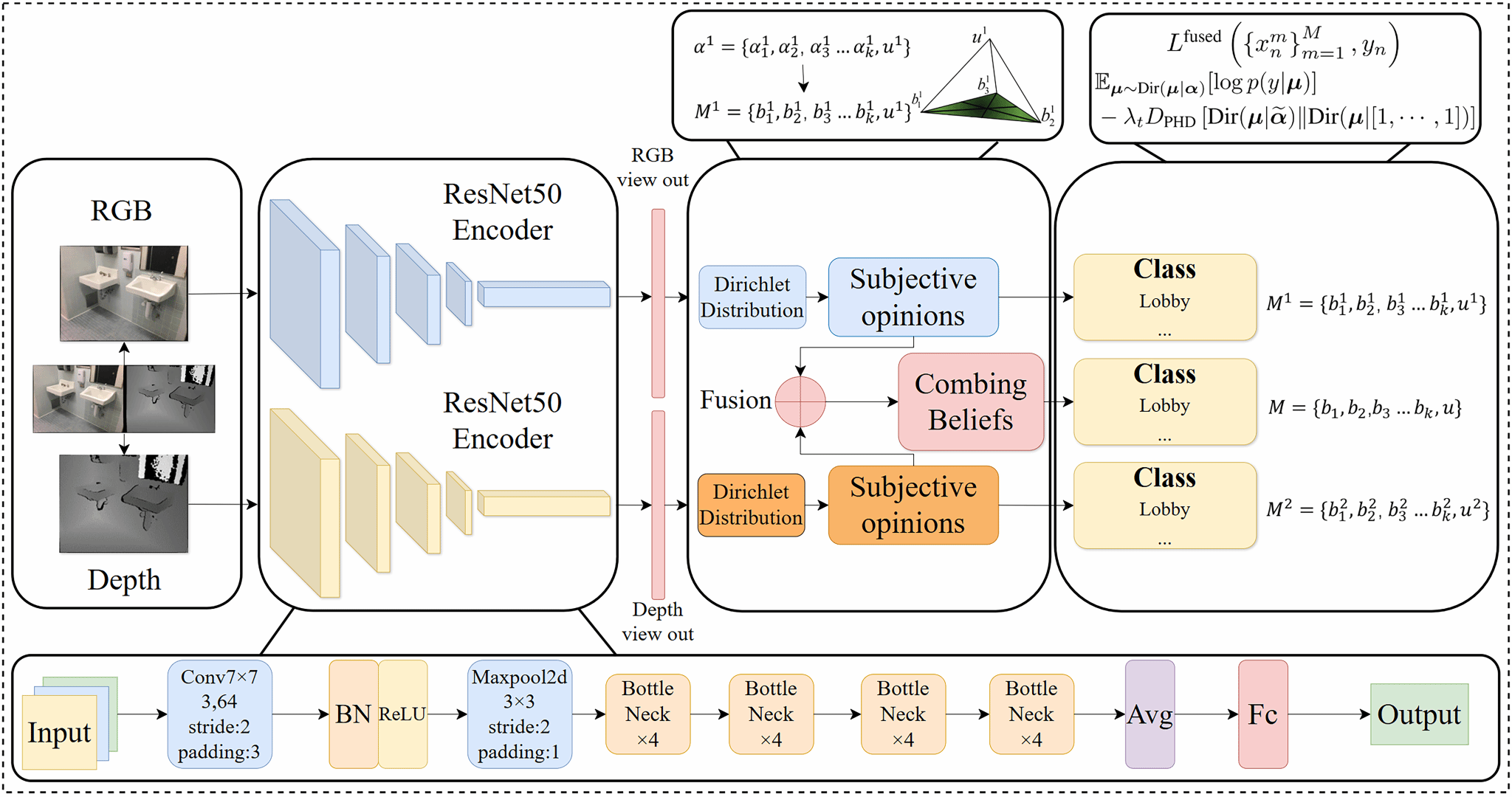

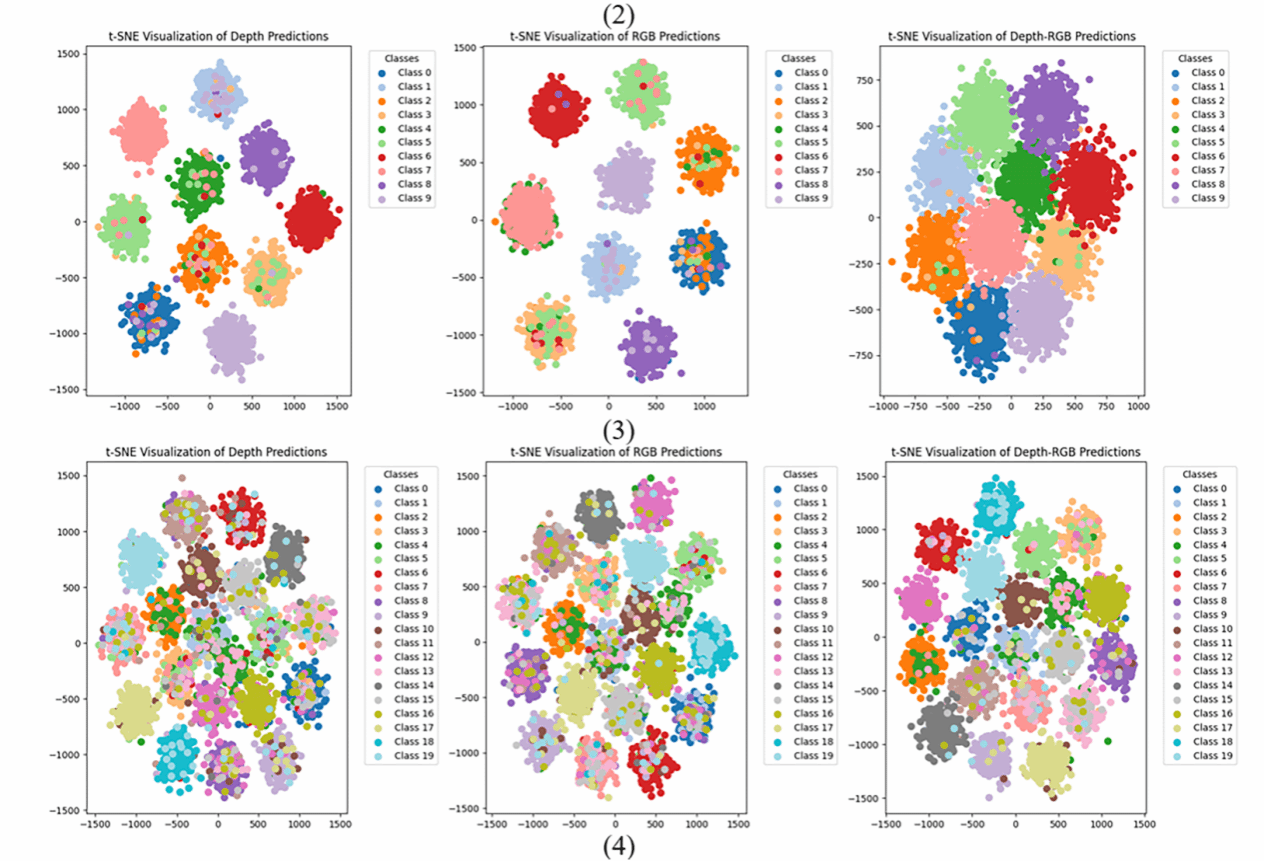

In comprehensive evaluations on multi-modal datasets such as ADE20K, Caltech101-7, and MSRC-v1, KPHD-Net showed superior performance across varying noise levels and missing-data ratios. The model achieved classification accuracy gains exceeding 5% while delivering markedly more robust clustering. The work both provides a novel uncertainty quantification framework for multi-view learning and establishes a theoretical and methodological foundation for future applications in medical imaging and intelligent perception.

Figure 3: t-SNE Visualization of Multi-view Clustering Results on Caltech101-7 and Caltech101-20 Datasets

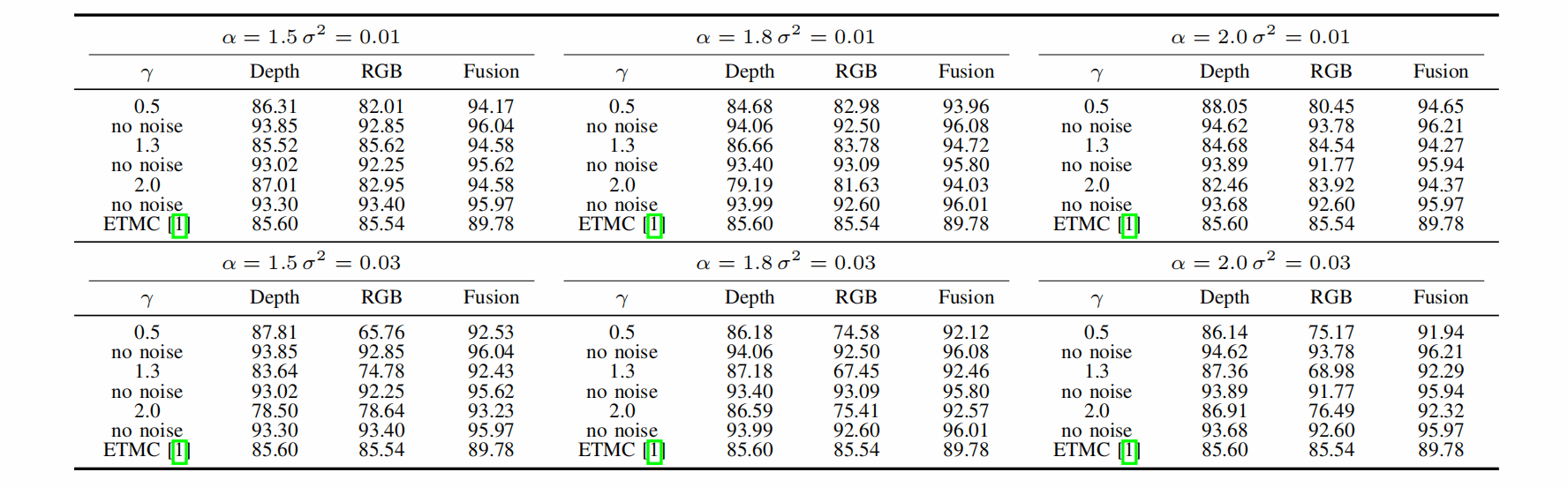

Figure 4: Summary of Classification Results on the ADE20K Dataset under Different Gaussian Noise Levels

The research presents KPHD-Net, a multi-view learning framework that combines Kalman filtering, PHD, and subjective logic. It performs uncertainty quantification in classification and clustering through dynamic evidence fusion. In extensive tests on datasets including ADE20K, MSRC-v1, and Caltech101-7/20, KPHD-Net consistently outperformed existing methods under various noise levels (e.g., σ²=0.03) and missing ratios while maintaining high accuracy and stability. The framework also delivered consistent performance improvements across various backbone networks, including ResNet50, DenseNet, Mamba, and ViT, confirming its broad generalizability and reliability. This achievement not only establishes a new uncertainty quantification framework for multi-view learning but also strengthens the theoretical groundwork for applications in intelligent medical image analysis and intelligent perception. The team plans to further optimize the model to enhance its adaptability in complex scenarios and expand its application boundaries.
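As a rough illustration of how subjective logic turns per-view evidence into beliefs and an explicit uncertainty value, the sketch below uses the formulation common in evidential deep learning: Dirichlet parameters are evidence plus one, and uncertainty is the number of classes divided by the Dirichlet strength. Two views are then fused with a reduced Dempster combination rule. This is an assumption-laden sketch, not the paper's method: KPHD-Net's Kalman-filter-based fusion is not reproduced, and the function names and evidence values are hypothetical.

```python
import numpy as np

def opinion_from_evidence(evidence):
    """Map non-negative evidence for K classes to (belief, uncertainty)."""
    evidence = np.asarray(evidence, dtype=float)
    num_classes = evidence.size
    alpha = evidence + 1.0                 # Dirichlet parameters
    strength = alpha.sum()                 # Dirichlet strength S
    belief = evidence / strength           # b_k = e_k / S
    uncertainty = num_classes / strength   # u = K / S, so sum(b) + u = 1
    return belief, uncertainty

def dempster_combine(b1, u1, b2, u2):
    """Fuse two opinions over the same classes (reduced Dempster rule)."""
    # Conflict: belief mass the two views assign to different classes
    conflict = np.sum(np.outer(b1, b2)) - np.sum(b1 * b2)
    scale = 1.0 / (1.0 - conflict)
    belief = scale * (b1 * b2 + b1 * u2 + b2 * u1)
    uncertainty = scale * (u1 * u2)
    return belief, uncertainty

# Hypothetical evidence from two views for a 3-class problem
b_a, u_a = opinion_from_evidence([9.0, 0.0, 0.0])   # confident view
b_b, u_b = opinion_from_evidence([4.0, 1.0, 0.0])   # less confident view
b_fused, u_fused = dempster_combine(b_a, u_a, b_b, u_b)
print("fused belief:", b_fused, "fused uncertainty:", u_fused)
```

In this formulation a view with no evidence has uncertainty 1 and leaves the other view's opinion unchanged when fused, which is one reason evidence-based fusion is attractive for missing or heavily corrupted views.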

Under Li Chun's leadership, the team maintains a strong focus on academic research and practical application, specializing in cutting-edge fields such as medical imaging, AI vision technologies, graphics and image processing, and machine learning. The group has achieved breakthroughs in several key technologies and actively pursues the translation of research outcomes into clinical applications and industrial practices, consistently advancing innovation across related fields.

Paper link: https://ieeexplore.ieee.org/document/11045813